Type tf

Namespace tensorflow

Brings all of the public TensorFlow interface into this module.

Methods

- a

- a_dyn

- abs

- abs_dyn

- accumulate_n

- accumulate_n

- accumulate_n

- accumulate_n

- accumulate_n_dyn

- acos

- acos_dyn

- acosh

- acosh_dyn

- add

- add

- add_check_numerics_ops

- add_check_numerics_ops_dyn

- add_dyn

- add_n

- add_n

- add_n_dyn

- add_to_collection

- add_to_collection

- add_to_collection

- add_to_collection

- add_to_collections

- add_to_collections

- adjust_hsv_in_yiq

- adjust_hsv_in_yiq_dyn

- all_variables

- all_variables_dyn

- angle

- angle_dyn

- arg_max

- arg_max

- arg_max_dyn

- arg_min

- arg_min

- arg_min_dyn

- argmax

- argmax

- argmax

- argmax

- argmax

- argmax

- argmax

- argmax

- argmax

- argmax

- argmax

- argmax

- argmax_dyn

- argmin

- argmin

- argmin

- argmin

- argmin

- argmin

- argmin

- argmin

- argmin

- argmin

- argmin

- argmin

- argmin_dyn

- argsort

- argsort

- argsort

- argsort

- argsort

- argsort_dyn

- as_dtype

- as_dtype

- as_dtype_dyn

- as_string

- as_string_dyn

- asin

- asin_dyn

- asinh

- asinh_dyn

- Assert

- Assert

- Assert

- Assert

- Assert

- Assert

- Assert

- Assert

- Assert

- Assert

- Assert

- Assert

- Assert

- Assert

- Assert

- Assert

- Assert

- Assert

- Assert

- Assert

- Assert

- Assert

- Assert

- Assert

- Assert_dyn

- assert_equal

- assert_equal

- assert_equal

- assert_equal

- assert_equal

- assert_equal

- assert_equal

- assert_equal

- assert_equal

- assert_equal

- assert_equal_dyn

- assert_greater

- assert_greater

- assert_greater

- assert_greater

- assert_greater

- assert_greater

- assert_greater

- assert_greater

- assert_greater

- assert_greater

- assert_greater_dyn

- assert_greater_equal

- assert_greater_equal

- assert_greater_equal

- assert_greater_equal

- assert_greater_equal

- assert_greater_equal

- assert_greater_equal

- assert_greater_equal

- assert_greater_equal

- assert_greater_equal

- assert_greater_equal_dyn

- assert_integer

- assert_integer

- assert_integer_dyn

- assert_less

- assert_less

- assert_less

- assert_less

- assert_less

- assert_less

- assert_less

- assert_less

- assert_less

- assert_less

- assert_less

- assert_less

- assert_less

- assert_less

- assert_less

- assert_less

- assert_less_dyn

- assert_less_equal

- assert_less_equal

- assert_less_equal_dyn

- assert_near

- assert_near

- assert_near_dyn

- assert_negative

- assert_negative

- assert_negative_dyn

- assert_non_negative

- assert_non_negative

- assert_non_negative

- assert_non_negative

- assert_non_negative

- assert_non_negative

- assert_non_negative_dyn

- assert_non_positive

- assert_non_positive

- assert_non_positive_dyn

- assert_none_equal

- assert_none_equal

- assert_none_equal

- assert_none_equal

- assert_none_equal

- assert_none_equal

- assert_none_equal

- assert_none_equal

- assert_none_equal

- assert_none_equal

- assert_none_equal

- assert_none_equal

- assert_none_equal

- assert_none_equal

- assert_none_equal_dyn

- assert_positive

- assert_positive

- assert_positive_dyn

- assert_proper_iterable

- assert_proper_iterable

- assert_proper_iterable

- assert_proper_iterable

- assert_proper_iterable

- assert_proper_iterable

- assert_proper_iterable

- assert_proper_iterable_dyn

- assert_rank

- assert_rank

- assert_rank

- assert_rank

- assert_rank

- assert_rank

- assert_rank

- assert_rank

- assert_rank_at_least

- assert_rank_at_least

- assert_rank_at_least

- assert_rank_at_least

- assert_rank_at_least

- assert_rank_at_least

- assert_rank_at_least_dyn

- assert_rank_dyn

- assert_rank_in

- assert_rank_in

- assert_rank_in

- assert_rank_in

- assert_rank_in

- assert_rank_in

- assert_rank_in

- assert_rank_in

- assert_rank_in

- assert_rank_in

- assert_rank_in_dyn

- assert_same_float_dtype

- assert_same_float_dtype

- assert_same_float_dtype

- assert_same_float_dtype_dyn

- assert_scalar

- assert_scalar_dyn

- assert_type

- assert_type

- assert_type_dyn

- assert_variables_initialized

- assert_variables_initialized_dyn

- assign

- assign

- assign_add

- assign_add

- assign_add

- assign_add

- assign_add_dyn

- assign_dyn

- assign_sub

- assign_sub

- assign_sub

- assign_sub

- assign_sub_dyn

- atan

- atan_dyn

- atan2

- atan2_dyn

- atanh

- atanh_dyn

- attr

- attr_bool

- attr_bool_dyn

- attr_bool_list

- attr_bool_list_dyn

- attr_default

- attr_default_dyn

- attr_dyn

- attr_empty_list_default

- attr_empty_list_default_dyn

- attr_enum

- attr_enum_dyn

- attr_enum_list

- attr_enum_list_dyn

- attr_float

- attr_float_dyn

- attr_list_default

- attr_list_default_dyn

- attr_list_min

- attr_list_min_dyn

- attr_list_type_default

- attr_list_type_default_dyn

- attr_min

- attr_min_dyn

- attr_partial_shape

- attr_partial_shape_dyn

- attr_partial_shape_list

- attr_partial_shape_list_dyn

- attr_shape

- attr_shape_dyn

- attr_shape_list

- attr_shape_list_dyn

- attr_type_default

- attr_type_default_dyn

- audio_microfrontend

- audio_microfrontend

- audio_microfrontend

- audio_microfrontend

- audio_microfrontend

- audio_microfrontend

- audio_microfrontend

- audio_microfrontend

- audio_microfrontend

- audio_microfrontend

- audio_microfrontend

- audio_microfrontend

- audio_microfrontend

- audio_microfrontend

- audio_microfrontend

- audio_microfrontend

- audio_microfrontend_dyn

- b

- b_dyn

- batch_gather

- batch_gather

- batch_gather

- batch_gather

- batch_gather

- batch_gather

- batch_gather

- batch_gather

- batch_gather

- batch_gather

- batch_gather

- batch_gather

- batch_gather

- batch_gather

- batch_gather

- batch_gather

- batch_gather

- batch_gather

- batch_gather

- batch_gather

- batch_gather_dyn

- batch_scatter_update

- batch_scatter_update

- batch_scatter_update

- batch_scatter_update

- batch_scatter_update

- batch_scatter_update

- batch_scatter_update

- batch_scatter_update

- batch_scatter_update

- batch_scatter_update_dyn

- batch_to_space

- batch_to_space_dyn

- batch_to_space_nd

- batch_to_space_nd_dyn

- betainc

- betainc_dyn

- binary

- binary_dyn

- bincount

- bincount_dyn

- bipartite_match

- bipartite_match_dyn

- bitcast

- bitcast_dyn

- boolean_mask

- boolean_mask

- boolean_mask_dyn

- broadcast_dynamic_shape

- broadcast_dynamic_shape

- broadcast_dynamic_shape

- broadcast_dynamic_shape

- broadcast_dynamic_shape

- broadcast_dynamic_shape

- broadcast_dynamic_shape

- broadcast_dynamic_shape

- broadcast_dynamic_shape

- broadcast_dynamic_shape

- broadcast_dynamic_shape

- broadcast_dynamic_shape

- broadcast_dynamic_shape_dyn

- broadcast_static_shape

- broadcast_static_shape

- broadcast_static_shape

- broadcast_static_shape

- broadcast_static_shape

- broadcast_static_shape

- broadcast_static_shape

- broadcast_static_shape

- broadcast_static_shape

- broadcast_static_shape

- broadcast_static_shape

- broadcast_static_shape

- broadcast_static_shape

- broadcast_static_shape

- broadcast_static_shape

- broadcast_static_shape

- broadcast_static_shape_dyn

- broadcast_to

- broadcast_to_dyn

- bucketize_with_input_boundaries

- bucketize_with_input_boundaries_dyn

- build_categorical_equality_splits

- build_categorical_equality_splits_dyn

- build_dense_inequality_splits

- build_dense_inequality_splits_dyn

- build_sparse_inequality_splits

- build_sparse_inequality_splits_dyn

- bytes_in_use

- bytes_in_use_dyn

- bytes_limit

- bytes_limit_dyn

- case

- case

- case

- case

- case

- case

- case_dyn

- cast

- cast

- cast

- cast

- cast

- cast

- cast

- cast

- cast

- cast

- cast

- cast

- cast

- cast

- cast

- cast

- cast_dyn

- cast<T>

- ceil

- ceil_dyn

- center_tree_ensemble_bias

- center_tree_ensemble_bias_dyn

- check_numerics

- check_numerics_dyn

- cholesky

- cholesky_dyn

- cholesky_solve

- cholesky_solve_dyn

- clip_by_average_norm

- clip_by_average_norm

- clip_by_average_norm_dyn

- clip_by_global_norm

- clip_by_global_norm

- clip_by_global_norm

- clip_by_global_norm

- clip_by_global_norm_dyn

- clip_by_norm

- clip_by_norm

- clip_by_norm

- clip_by_norm

- clip_by_norm

- clip_by_norm

- clip_by_norm

- clip_by_norm

- clip_by_norm

- clip_by_norm

- clip_by_norm

- clip_by_norm

- clip_by_norm

- clip_by_norm

- clip_by_norm

- clip_by_norm

- clip_by_norm

- clip_by_norm

- clip_by_norm

- clip_by_norm

- clip_by_norm

- clip_by_norm_dyn

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value

- clip_by_value_dyn

- complex

- complex_dyn

- complex_struct

- complex_struct_dyn

- concat

- concat

- concat_dyn

- cond

- cond

- cond

- cond

- cond

- cond

- cond

- cond

- cond

- cond

- cond

- cond

- cond

- cond

- cond

- cond

- cond_dyn

- confusion_matrix

- confusion_matrix_dyn

- conj

- conj

- conj

- conj_dyn

- constant

- constant

- constant

- constant_dyn

- constant_scalar<T>

- constant<T>

- constant<T>

- constant<T>

- constant<T>

- constant<T>

- constant<T>

- constant<T>

- container

- container_dyn

- control_dependencies

- control_dependencies

- control_dependencies_dyn

- control_flow_v2_enabled

- control_flow_v2_enabled_dyn

- convert_to_tensor

- convert_to_tensor

- convert_to_tensor

- convert_to_tensor

- convert_to_tensor

- convert_to_tensor

- convert_to_tensor

- convert_to_tensor

- convert_to_tensor

- convert_to_tensor

- convert_to_tensor

- convert_to_tensor

- convert_to_tensor

- convert_to_tensor

- convert_to_tensor

- convert_to_tensor

- convert_to_tensor_dyn

- convert_to_tensor_or_indexed_slices

- convert_to_tensor_or_indexed_slices_dyn

- convert_to_tensor_or_sparse_tensor

- convert_to_tensor_or_sparse_tensor

- convert_to_tensor_or_sparse_tensor_dyn

- copy_op

- copy_op_dyn

- cos

- cos_dyn

- cosh

- cosh_dyn

- count_nonzero

- count_nonzero

- count_nonzero

- count_nonzero

- count_nonzero

- count_nonzero

- count_nonzero

- count_nonzero

- count_nonzero

- count_nonzero

- count_nonzero

- count_nonzero

- count_nonzero_dyn

- count_up_to

- count_up_to

- count_up_to

- count_up_to_dyn

- create_fertile_stats_variable

- create_fertile_stats_variable_dyn

- create_partitioned_variables

- create_partitioned_variables

- create_partitioned_variables

- create_partitioned_variables

- create_partitioned_variables

- create_partitioned_variables

- create_partitioned_variables_dyn

- create_quantile_accumulator

- create_quantile_accumulator

- create_quantile_accumulator_dyn

- create_stats_accumulator_scalar

- create_stats_accumulator_scalar_dyn

- create_stats_accumulator_tensor

- create_stats_accumulator_tensor_dyn

- create_tree_ensemble_variable

- create_tree_ensemble_variable_dyn

- create_tree_variable

- create_tree_variable_dyn

- cross

- cross_dyn

- cumprod

- cumprod_dyn

- cumsum

- cumsum_dyn

- custom_gradient

- custom_gradient_dyn

- decision_tree_ensemble_resource_handle_op

- decision_tree_ensemble_resource_handle_op_dyn

- decision_tree_resource_handle_op

- decision_tree_resource_handle_op_dyn

- decode_base64

- decode_base64_dyn

- decode_compressed

- decode_compressed_dyn

- decode_csv

- decode_csv_dyn

- decode_json_example

- decode_json_example_dyn

- decode_libsvm

- decode_libsvm_dyn

- decode_raw

- decode_raw

- decode_raw

- decode_raw

- decode_raw_dyn

- default_attrs

- default_attrs_dyn

- delete_session_tensor

- delete_session_tensor_dyn

- depth_to_space

- depth_to_space

- depth_to_space

- depth_to_space

- depth_to_space_dyn

- dequantize

- dequantize_dyn

- deserialize_many_sparse

- deserialize_many_sparse_dyn

- device

- device

- device_dyn

- device_placement_op

- device_placement_op_dyn

- diag

- diag_dyn

- diag_part

- diag_part_dyn

- digamma

- digamma_dyn

- dimension_at_index

- dimension_at_index

- dimension_at_index

- dimension_at_index

- dimension_at_index

- dimension_at_index

- dimension_at_index

- dimension_at_index

- dimension_at_index

- dimension_at_index

- dimension_at_index

- dimension_at_index

- dimension_at_index

- dimension_at_index

- dimension_at_index

- dimension_at_index

- dimension_at_index

- dimension_at_index

- dimension_at_index

- dimension_at_index

- dimension_at_index

- dimension_at_index_dyn

- dimension_value

- dimension_value

- dimension_value

- dimension_value_dyn

- disable_control_flow_v2

- disable_control_flow_v2_dyn

- disable_eager_execution

- disable_eager_execution_dyn

- disable_tensor_equality

- disable_tensor_equality_dyn

- disable_v2_behavior

- disable_v2_behavior_dyn

- disable_v2_tensorshape

- disable_v2_tensorshape_dyn

- div

- div

- div

- div

- div

- div

- div_dyn

- div_no_nan

- div_no_nan_dyn

- divide

- divide

- divide

- divide

- divide

- divide_dyn

- dynamic_partition

- dynamic_partition

- dynamic_partition_dyn

- dynamic_stitch

- dynamic_stitch

- dynamic_stitch_dyn

- edit_distance

- edit_distance

- edit_distance

- edit_distance

- edit_distance_dyn

- einsum

- einsum

- einsum_dyn

- einsum_dyn

- enable_control_flow_v2

- enable_control_flow_v2_dyn

- enable_eager_execution

- enable_eager_execution_dyn

- enable_tensor_equality

- enable_tensor_equality_dyn

- enable_v2_behavior

- enable_v2_behavior_dyn

- enable_v2_tensorshape

- enable_v2_tensorshape_dyn

- encode_base64

- encode_base64_dyn

- equal

- equal

- equal

- equal

- equal

- equal

- equal

- equal

- equal

- equal

- equal

- equal

- equal

- equal

- equal

- equal

- equal

- equal

- equal_dyn

- erf

- erf_dyn

- erfc

- erfc_dyn

- executing_eagerly

- executing_eagerly_dyn

- exp

- exp_dyn

- expand_dims

- expand_dims

- expand_dims

- expand_dims

- expand_dims

- expand_dims

- expand_dims_dyn

- expm1

- expm1_dyn

- extract_image_patches

- extract_image_patches

- extract_image_patches

- extract_image_patches

- extract_image_patches

- extract_image_patches

- extract_image_patches_dyn

- extract_volume_patches

- extract_volume_patches_dyn

- eye

- eye

- eye

- eye

- eye

- eye

- eye

- eye

- eye

- eye

- eye

- eye

- eye

- eye

- eye_dyn

- fake_quant_with_min_max_args

- fake_quant_with_min_max_args

- fake_quant_with_min_max_args

- fake_quant_with_min_max_args

- fake_quant_with_min_max_args_dyn

- fake_quant_with_min_max_args_gradient

- fake_quant_with_min_max_args_gradient

- fake_quant_with_min_max_args_gradient

- fake_quant_with_min_max_args_gradient

- fake_quant_with_min_max_args_gradient_dyn

- fake_quant_with_min_max_vars

- fake_quant_with_min_max_vars_dyn

- fake_quant_with_min_max_vars_gradient

- fake_quant_with_min_max_vars_gradient_dyn

- fake_quant_with_min_max_vars_per_channel

- fake_quant_with_min_max_vars_per_channel_dyn

- fake_quant_with_min_max_vars_per_channel_gradient

- fake_quant_with_min_max_vars_per_channel_gradient_dyn

- feature_usage_counts

- feature_usage_counts_dyn

- fertile_stats_deserialize

- fertile_stats_deserialize_dyn

- fertile_stats_is_initialized_op

- fertile_stats_is_initialized_op_dyn

- fertile_stats_resource_handle_op

- fertile_stats_resource_handle_op_dyn

- fertile_stats_serialize

- fertile_stats_serialize_dyn

- fft

- fft_dyn

- fft2d

- fft2d_dyn

- fft3d

- fft3d_dyn

- fill

- fill

- fill_dyn

- finalize_tree

- finalize_tree_dyn

- fingerprint

- fingerprint_dyn

- five_float_outputs

- five_float_outputs_dyn

- fixed_size_partitioner

- fixed_size_partitioner_dyn

- float_input

- float_input_dyn

- float_output

- float_output_dyn

- float_output_string_output

- float_output_string_output_dyn

- floor

- floor_div

- floor_div

- floor_div_dyn

- floor_dyn

- floordiv

- floordiv

- floordiv

- floordiv

- floordiv

- floordiv

- floordiv

- floordiv

- floordiv

- floordiv

- floordiv

- floordiv

- floordiv

- floordiv

- floordiv

- floordiv

- floordiv

- floordiv

- floordiv

- floordiv

- floordiv_dyn

- foldl

- foldl

- foldl

- foldl

- foldl

- foldl

- foldl

- foldl

- foldl

- foldl

- foldl

- foldl

- foldl

- foldl

- foldl

- foldl

- foldl

- foldl

- foldl

- foldl

- foldl_dyn

- foldr

- foldr

- foldr

- foldr

- foldr

- foldr

- foldr

- foldr

- foldr

- foldr_dyn

- foo1

- foo1_dyn

- foo2

- foo2_dyn

- foo3

- foo3_dyn

- func_attr

- func_attr

- func_attr

- func_attr_dyn

- func_list_attr

- func_list_attr_dyn

- function

- function

- function

- function

- function

- function_dyn

- gather

- gather

- gather

- gather

- gather

- gather

- gather

- gather

- gather

- gather

- gather

- gather

- gather_dyn

- gather_nd

- gather_nd

- gather_nd_dyn

- gather_tree

- gather_tree_dyn

- get_collection

- get_collection

- get_collection

- get_collection

- get_collection

- get_collection

- get_default_graph

- get_default_graph_dyn

- get_default_session

- get_default_session_dyn

- get_local_variable

- get_local_variable

- get_local_variable

- get_local_variable

- get_local_variable

- get_local_variable_dyn

- get_logger

- get_logger_dyn

- get_seed

- get_seed

- get_seed

- get_seed_dyn

- get_session_handle

- get_session_handle

- get_session_handle_dyn

- get_session_tensor

- get_session_tensor_dyn

- get_static_value

- get_static_value

- get_static_value

- get_static_value_dyn

- get_variable

- get_variable

- get_variable

- get_variable

- get_variable

- get_variable

- get_variable

- get_variable

- get_variable

- get_variable

- get_variable

- get_variable

- get_variable

- get_variable

- get_variable

- get_variable

- get_variable

- get_variable

- get_variable

- get_variable

- get_variable

- get_variable

- get_variable

- get_variable

- get_variable_dyn

- get_variable_scope

- get_variable_scope_dyn

- global_norm

- global_norm

- global_norm_dyn

- global_variables

- global_variables_dyn

- global_variables_initializer

- global_variables_initializer_dyn

- grad_pass_through

- grad_pass_through

- grad_pass_through_dyn

- gradient_trees_partition_examples

- gradient_trees_partition_examples_dyn

- gradient_trees_prediction

- gradient_trees_prediction

- gradient_trees_prediction_dyn

- gradient_trees_prediction_verbose

- gradient_trees_prediction_verbose

- gradient_trees_prediction_verbose_dyn

- gradients

- gradients

- gradients

- gradients

- gradients

- gradients

- gradients

- gradients

- gradients

- gradients

- gradients

- gradients

- gradients

- gradients

- gradients

- gradients

- gradients

- gradients

- gradients_dyn

- graph_def_version

- graph_def_version_dyn

- greater

- greater

- greater

- greater

- greater

- greater

- greater

- greater

- greater

- greater_dyn

- greater_equal

- greater_equal

- greater_equal

- greater_equal

- greater_equal

- greater_equal

- greater_equal

- greater_equal

- greater_equal

- greater_equal_dyn

- group

- group

- group_dyn

- group_dyn

- grow_tree_ensemble

- grow_tree_ensemble

- grow_tree_ensemble_dyn

- grow_tree_v4

- grow_tree_v4_dyn

- guarantee_const

- guarantee_const_dyn

- hard_routing_function

- hard_routing_function_dyn

- hessians

- hessians

- hessians

- hessians

- hessians_dyn

- histogram_fixed_width

- histogram_fixed_width

- histogram_fixed_width

- histogram_fixed_width

- histogram_fixed_width

- histogram_fixed_width

- histogram_fixed_width

- histogram_fixed_width

- histogram_fixed_width

- histogram_fixed_width

- histogram_fixed_width

- histogram_fixed_width

- histogram_fixed_width_bins

- histogram_fixed_width_bins

- histogram_fixed_width_bins

- histogram_fixed_width_bins

- histogram_fixed_width_bins

- histogram_fixed_width_bins

- histogram_fixed_width_bins_dyn

- histogram_fixed_width_dyn

- identity

- identity

- identity_dyn

- identity_n

- identity_n_dyn

- ifft

- ifft_dyn

- ifft2d

- ifft2d_dyn

- ifft3d

- ifft3d_dyn

- igamma

- igamma_dyn

- igammac

- igammac_dyn

- imag

- imag_dyn

- image_connected_components

- image_connected_components_dyn

- image_projective_transform

- image_projective_transform_dyn

- image_projective_transform_v2

- image_projective_transform_v2_dyn

- import_graph_def

- import_graph_def

- import_graph_def

- import_graph_def

- import_graph_def

- import_graph_def

- import_graph_def_dyn

- in_polymorphic_twice

- in_polymorphic_twice_dyn

- init_scope

- init_scope_dyn

- initialize_all_tables

- initialize_all_tables_dyn

- initialize_all_variables

- initialize_all_variables_dyn

- initialize_local_variables

- initialize_local_variables_dyn

- initialize_variables

- initialize_variables_dyn

- int_attr

- int_attr_dyn

- int_input

- int_input_dyn

- int_input_float_input

- int_input_float_input_dyn

- int_input_int_output

- int_input_int_output_dyn

- int_output

- int_output_dyn

- int_output_float_output

- int_output_float_output_dyn

- int64_output

- int64_output_dyn

- invert_permutation

- invert_permutation_dyn

- is_finite

- is_finite_dyn

- is_inf

- is_inf_dyn

- is_nan

- is_nan_dyn

- is_non_decreasing

- is_non_decreasing_dyn

- is_numeric_tensor

- is_numeric_tensor

- is_numeric_tensor_dyn

- is_strictly_increasing

- is_strictly_increasing_dyn

- is_tensor

- is_tensor

- is_tensor

- is_tensor_dyn

- is_variable_initialized

- is_variable_initialized_dyn

- k_feature_gradient

- k_feature_gradient_dyn

- k_feature_routing_function

- k_feature_routing_function_dyn

- kernel_label

- kernel_label_dyn

- kernel_label_required

- kernel_label_required_dyn

- lbeta

- lbeta

- lbeta

- lbeta

- lbeta_dyn

- less

- less

- less

- less

- less

- less

- less

- less

- less

- less_dyn

- less_equal

- less_equal

- less_equal

- less_equal

- less_equal

- less_equal

- less_equal

- less_equal

- less_equal

- less_equal_dyn

- lgamma

- lgamma_dyn

- linspace

- linspace_dyn

- list_input

- list_input_dyn

- list_output

- list_output_dyn

- load_file_system_library

- load_file_system_library_dyn

- load_library

- load_library_dyn

- load_op_library

- load_op_library

- load_op_library_dyn

- local_variables

- local_variables_dyn

- local_variables_initializer

- local_variables_initializer_dyn

- log

- log_dyn

- log_sigmoid

- log_sigmoid

- log_sigmoid

- log_sigmoid_dyn

- log1p

- log1p_dyn

- logical_and

- logical_and

- logical_and

- logical_and

- logical_and_dyn

- logical_not

- logical_not_dyn

- logical_or

- logical_or

- logical_or

- logical_or

- logical_or_dyn

- logical_xor

- logical_xor

- logical_xor_dyn

- make_ndarray

- make_ndarray_dyn

- make_quantile_summaries

- make_quantile_summaries_dyn

- make_template

- make_template_dyn

- make_tensor_proto

- make_tensor_proto

- make_tensor_proto

- make_tensor_proto

- make_tensor_proto

- make_tensor_proto

- make_tensor_proto

- make_tensor_proto

- make_tensor_proto_dyn

- map_fn

- map_fn

- map_fn

- map_fn

- map_fn

- map_fn

- map_fn

- map_fn

- map_fn

- map_fn

- map_fn

- map_fn

- map_fn

- map_fn

- map_fn

- map_fn_dyn

- masked_matmul

- masked_matmul_dyn

- matching_files

- matching_files_dyn

- matmul

- matmul

- matmul

- matmul

- matmul

- matmul

- matmul

- matmul

- matmul

- matmul

- matmul

- matmul

- matmul

- matmul

- matmul

- matmul

- matmul

- matmul

- matmul_dyn

- matrix_band_part

- matrix_band_part_dyn

- matrix_determinant

- matrix_determinant_dyn

- matrix_diag

- matrix_diag

- matrix_diag_dyn

- matrix_diag_part

- matrix_diag_part

- matrix_diag_part_dyn

- matrix_inverse

- matrix_inverse_dyn

- matrix_set_diag

- matrix_set_diag

- matrix_set_diag

- matrix_set_diag

- matrix_set_diag

- matrix_set_diag

- matrix_set_diag

- matrix_set_diag

- matrix_set_diag

- matrix_set_diag

- matrix_set_diag

- matrix_set_diag

- matrix_set_diag_dyn

- matrix_solve

- matrix_solve_dyn

- matrix_solve_ls

- matrix_solve_ls

- matrix_solve_ls

- matrix_solve_ls

- matrix_solve_ls_dyn

- matrix_square_root

- matrix_square_root_dyn

- matrix_transpose

- matrix_transpose_dyn

- matrix_triangular_solve

- matrix_triangular_solve_dyn

- max_bytes_in_use

- max_bytes_in_use_dyn

- maximum

- maximum

- maximum

- maximum

- maximum

- maximum

- maximum

- maximum

- maximum

- maximum_dyn

- meshgrid

- meshgrid

- meshgrid_dyn

- meshgrid_dyn

- min_max_variable_partitioner

- min_max_variable_partitioner

- min_max_variable_partitioner

- min_max_variable_partitioner

- min_max_variable_partitioner_dyn

- minimum

- minimum

- minimum

- minimum

- minimum

- minimum

- minimum

- minimum

- minimum

- minimum_dyn

- mixed_struct

- mixed_struct_dyn

- mod

- mod

- mod_dyn

- model_variables

- model_variables_dyn

- moving_average_variables

- moving_average_variables_dyn

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial

- multinomial_dyn

- multiply

- multiply

- multiply

- multiply

- multiply

- multiply

- multiply

- multiply

- multiply

- multiply

- multiply

- multiply

- multiply

- multiply

- multiply

- multiply

- multiply

- multiply

- multiply_dyn

- n_in_polymorphic_twice

- n_in_polymorphic_twice_dyn

- n_in_twice

- n_in_twice_dyn

- n_in_two_type_variables

- n_in_two_type_variables_dyn

- n_ints_in

- n_ints_in_dyn

- n_ints_out

- n_ints_out_default

- n_ints_out_default_dyn

- n_ints_out_dyn

- n_polymorphic_in

- n_polymorphic_in_dyn

- n_polymorphic_out

- n_polymorphic_out_default

- n_polymorphic_out_default_dyn

- n_polymorphic_out_dyn

- n_polymorphic_restrict_in

- n_polymorphic_restrict_in_dyn

- n_polymorphic_restrict_out

- n_polymorphic_restrict_out_dyn

- negative

- negative_dyn

- no_op

- no_op

- no_op_dyn

- no_regularizer

- no_regularizer_dyn

- NoGradient

- NoGradient_dyn

- nondifferentiable_batch_function

- nondifferentiable_batch_function_dyn

- none

- none_dyn

- norm

- norm

- norm

- norm

- norm

- norm

- norm

- norm

- norm

- norm

- norm

- norm

- norm_dyn

- not_equal

- not_equal

- not_equal

- not_equal

- not_equal_dyn

- numpy_function

- numpy_function

- numpy_function

- numpy_function

- numpy_function

- numpy_function

- numpy_function

- numpy_function

- numpy_function_dyn

- obtain_next

- obtain_next_dyn

- old

- old_dyn

- one_hot

- one_hot

- one_hot

- one_hot

- one_hot

- one_hot

- one_hot

- one_hot_dyn

- ones

- ones

- ones

- ones

- ones_dyn

- ones_like

- ones_like

- ones_like

- ones_like_dyn

- op_scope

- op_scope_dyn

- op_with_default_attr

- op_with_default_attr_dyn

- op_with_future_default_attr

- op_with_future_default_attr_dyn

- out_t

- out_t_dyn

- out_type_list

- out_type_list_dyn

- out_type_list_restrict

- out_type_list_restrict_dyn

- pad

- pad

- pad

- pad

- pad

- pad

- pad

- pad

- pad

- pad

- pad

- pad

- pad_dyn

- parallel_stack

- parallel_stack_dyn

- parse_example

- parse_example

- parse_example

- parse_example

- parse_example_dyn

- parse_single_example

- parse_single_example

- parse_single_example

- parse_single_example_dyn

- parse_single_sequence_example

- parse_single_sequence_example_dyn

- parse_tensor

- parse_tensor_dyn

- periodic_resample

- periodic_resample_dyn

- periodic_resample_op_grad

- periodic_resample_op_grad_dyn

- placeholder

- placeholder

- placeholder

- placeholder

- placeholder_dyn

- placeholder_with_default

- placeholder_with_default

- placeholder_with_default_dyn

- polygamma

- polygamma_dyn

- polymorphic

- polymorphic_default_out

- polymorphic_default_out_dyn

- polymorphic_dyn

- polymorphic_out

- polymorphic_out_dyn

- pow

- pow

- pow

- pow

- pow

- pow

- pow

- pow

- pow

- pow_dyn

- print_dyn

- print_dyn

- Print_dyn

- process_input_v4

- process_input_v4_dyn

- py_func

- py_func

- py_func

- py_func_dyn

- py_function

- py_function

- py_function

- py_function

- py_function

- py_function

- py_function

- py_function

- py_function_dyn

- qr

- qr_dyn

- quantile_accumulator_add_summaries

- quantile_accumulator_add_summaries_dyn

- quantile_accumulator_deserialize

- quantile_accumulator_deserialize_dyn

- quantile_accumulator_flush

- quantile_accumulator_flush_dyn

- quantile_accumulator_flush_summary

- quantile_accumulator_flush_summary_dyn

- quantile_accumulator_get_buckets

- quantile_accumulator_get_buckets_dyn

- quantile_accumulator_is_initialized

- quantile_accumulator_is_initialized_dyn

- quantile_accumulator_serialize

- quantile_accumulator_serialize_dyn

- quantile_buckets

- quantile_buckets_dyn

- quantile_stream_resource_handle_op

- quantile_stream_resource_handle_op

- quantile_stream_resource_handle_op

- quantile_stream_resource_handle_op_dyn

- quantiles

- quantiles_dyn

- quantize

- quantize_dyn

- quantize_v2

- quantize_v2_dyn

- quantized_concat

- quantized_concat_dyn

- random_crop

- random_crop

- random_crop

- random_crop

- random_crop_dyn

- random_gamma

- random_gamma

- random_gamma

- random_gamma

- random_gamma

- random_gamma

- random_gamma

- random_gamma

- random_gamma

- random_gamma

- random_gamma

- random_gamma

- random_gamma

- random_gamma

- random_gamma

- random_gamma

- random_gamma

- random_gamma

- random_gamma

- random_gamma

- random_gamma

- random_gamma

- random_gamma

- random_gamma

- random_gamma_dyn

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal

- random_normal_dyn

- random_poisson

- random_poisson

- random_poisson

- random_poisson

- random_poisson

- random_poisson

- random_poisson

- random_poisson

- random_poisson

- random_poisson

- random_poisson

- random_poisson

- random_poisson

- random_poisson

- random_poisson

- random_poisson

- random_poisson

- random_poisson

- random_poisson_dyn

- random_shuffle

- random_shuffle

- random_shuffle_dyn

- random_uniform

- random_uniform

- random_uniform

- random_uniform

- random_uniform

- random_uniform

- random_uniform

- random_uniform

- random_uniform

- random_uniform

- random_uniform

- random_uniform

- random_uniform

- random_uniform

- random_uniform

- random_uniform

- random_uniform

- random_uniform

- random_uniform

- random_uniform

- random_uniform_dyn

- range

- range

- range

- range

- range

- range

- range

- range

- range

- range

- range

- range

- range

- range

- range

- range

- range

- range

- range

- range

- range

- range

- range

- range

- range_dyn

- rank

- rank

- rank

- rank

- rank

- rank

- rank_dyn

- read_file

- read_file_dyn

- real

- real_dyn

- realdiv

- realdiv

- realdiv_dyn

- reciprocal

- reciprocal_dyn

- recompute_grad

- recompute_grad

- recompute_grad_dyn

- reduce_all

- reduce_all

- reduce_all_dyn

- reduce_any

- reduce_any

- reduce_any_dyn

- reduce_join

- reduce_join

- reduce_join

- reduce_join

- reduce_join

- reduce_join

- reduce_join_dyn

- reduce_logsumexp

- reduce_logsumexp_dyn

- reduce_max

- reduce_max

- reduce_max

- reduce_max

- reduce_max_dyn

- reduce_mean

- reduce_mean

- reduce_mean

- reduce_mean

- reduce_mean

- reduce_mean

- reduce_mean

- reduce_mean

- reduce_mean

- reduce_mean

- reduce_mean

- reduce_mean

- reduce_mean

- reduce_mean

- reduce_mean

- reduce_mean

- reduce_mean_dyn

- reduce_min

- reduce_min_dyn

- reduce_prod

- reduce_prod_dyn

- reduce_slice_max

- reduce_slice_max_dyn

- reduce_slice_min

- reduce_slice_min_dyn

- reduce_slice_prod

- reduce_slice_prod_dyn

- reduce_slice_sum

- reduce_slice_sum_dyn

- reduce_sum

- reduce_sum

- reduce_sum

- reduce_sum

- reduce_sum

- reduce_sum

- reduce_sum

- reduce_sum

- reduce_sum

- reduce_sum

- reduce_sum

- reduce_sum

- reduce_sum

- reduce_sum

- reduce_sum

- reduce_sum

- reduce_sum_dyn

- ref_in

- ref_in_dyn

- ref_input_float_input

- ref_input_float_input_dyn

- ref_input_float_input_int_output

- ref_input_float_input_int_output_dyn

- ref_input_int_input

- ref_input_int_input_dyn

- ref_out

- ref_out_dyn

- ref_output

- ref_output_dyn

- ref_output_float_output

- ref_output_float_output_dyn

- regex_replace

- regex_replace_dyn

- register_tensor_conversion_function

- register_tensor_conversion_function

- register_tensor_conversion_function_dyn

- reinterpret_string_to_float

- reinterpret_string_to_float_dyn

- remote_fused_graph_execute

- remote_fused_graph_execute_dyn

- repeat

- repeat

- repeat

- repeat_dyn

- report_uninitialized_variables

- report_uninitialized_variables_dyn

- required_space_to_batch_paddings

- required_space_to_batch_paddings

- required_space_to_batch_paddings

- required_space_to_batch_paddings

- required_space_to_batch_paddings

- required_space_to_batch_paddings

- required_space_to_batch_paddings

- required_space_to_batch_paddings

- required_space_to_batch_paddings

- required_space_to_batch_paddings

- required_space_to_batch_paddings

- required_space_to_batch_paddings

- required_space_to_batch_paddings

- required_space_to_batch_paddings

- required_space_to_batch_paddings

- required_space_to_batch_paddings

- required_space_to_batch_paddings

- required_space_to_batch_paddings

- required_space_to_batch_paddings_dyn

- requires_older_graph_version

- requires_older_graph_version_dyn

- resampler

- resampler_dyn

- resampler_grad

- resampler_grad_dyn

- reserved_attr

- reserved_attr_dyn

- reserved_input

- reserved_input_dyn

- reset_default_graph

- reset_default_graph_dyn

- reshape

- reshape

- reshape

- reshape

- reshape

- reshape_dyn

- reshape<T>

- reshape<T>

- resource_create_op

- resource_create_op_dyn

- resource_initialized_op

- resource_initialized_op_dyn

- resource_using_op

- resource_using_op_dyn

- restrict

- restrict_dyn

- reverse

- reverse_dyn

- reverse_sequence

- reverse_sequence

- reverse_sequence

- reverse_sequence

- reverse_sequence

- reverse_sequence

- reverse_sequence_dyn

- rint

- rint_dyn

- roll

- roll

- roll

- roll

- roll

- roll

- roll

- roll

- roll

- roll

- roll

- roll

- roll

- roll

- roll

- roll

- roll

- roll

- roll

- roll

- roll

- roll

- roll

- roll

- roll

- roll

- roll

- roll_dyn

- round

- round_dyn

- routing_function

- routing_function_dyn

- routing_gradient

- routing_gradient_dyn

- rpc

- rpc_dyn

- rsqrt

- rsqrt_dyn

- saturate_cast

- saturate_cast

- saturate_cast_dyn

- scalar_mul

- scalar_mul

- scalar_mul

- scalar_mul

- scalar_mul

- scalar_mul

- scalar_mul

- scalar_mul

- scalar_mul

- scalar_mul

- scalar_mul

- scalar_mul

- scalar_mul_dyn

- scan

- scan

- scan

- scan

- scan

- scan

- scan

- scan

- scan

- scan

- scan

- scan

- scan

- scan

- scan

- scan

- scan

- scan

- scan

- scan

- scan_dyn

- scatter_add

- scatter_add

- scatter_add

- scatter_add_dyn

- scatter_add_ndim

- scatter_add_ndim_dyn

- scatter_div

- scatter_div_dyn

- scatter_max

- scatter_max_dyn

- scatter_min

- scatter_min_dyn

- scatter_mul

- scatter_mul_dyn

- scatter_nd

- scatter_nd_add

- scatter_nd_add_dyn

- scatter_nd_dyn

- scatter_nd_sub

- scatter_nd_sub_dyn

- scatter_nd_update

- scatter_nd_update_dyn

- scatter_sub

- scatter_sub_dyn

- scatter_update

- scatter_update

- scatter_update_dyn

- searchsorted

- searchsorted

- searchsorted

- searchsorted

- searchsorted_dyn

- segment_max

- segment_max_dyn

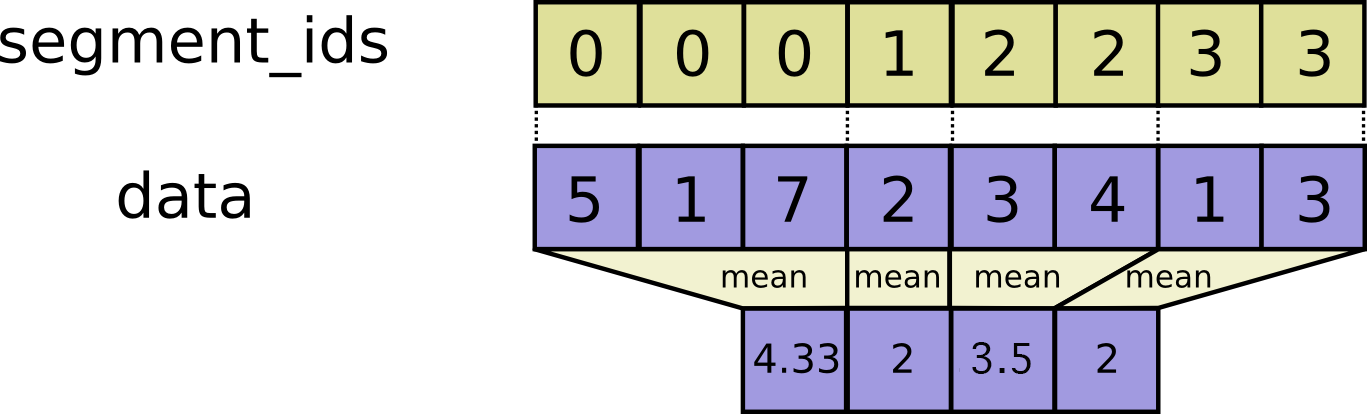

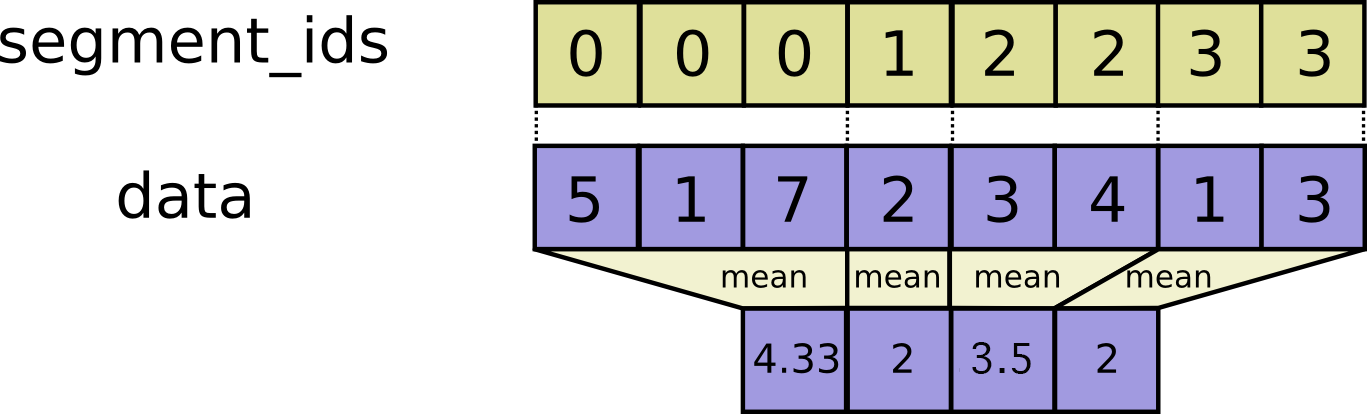

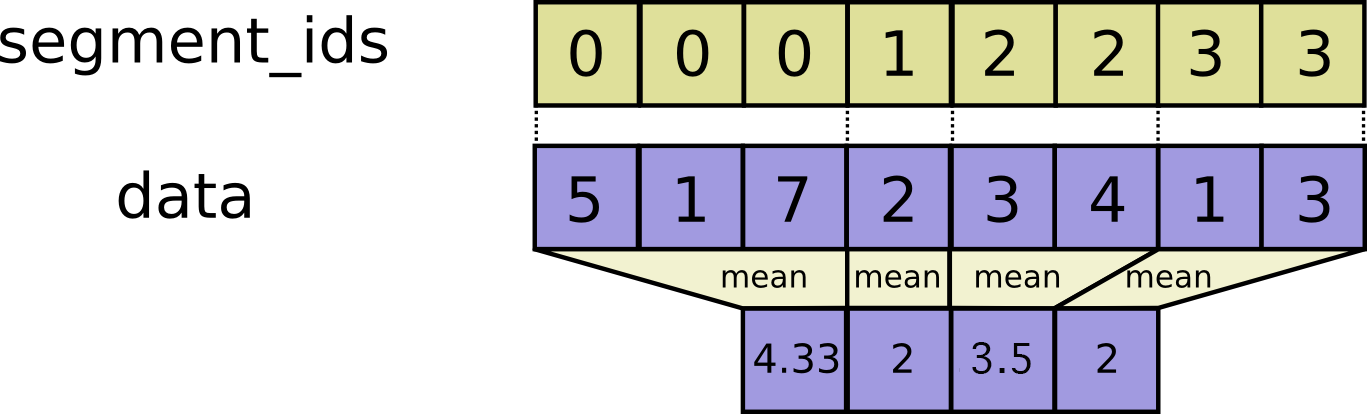

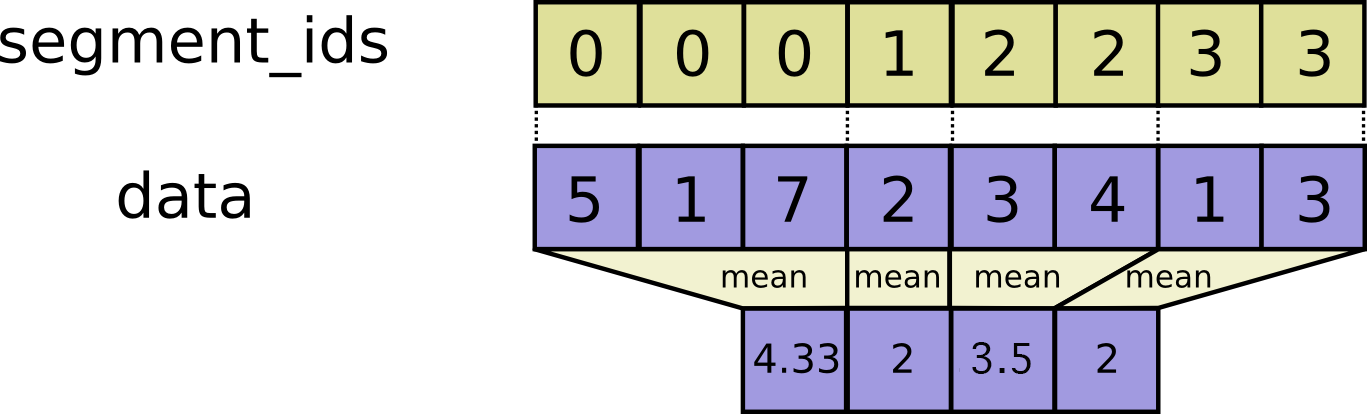

- segment_mean

- segment_mean_dyn

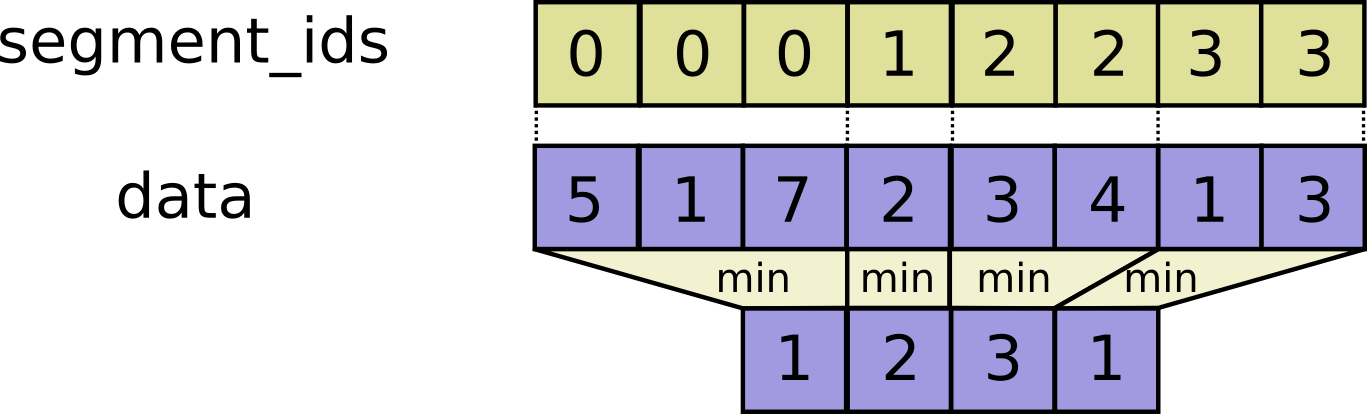

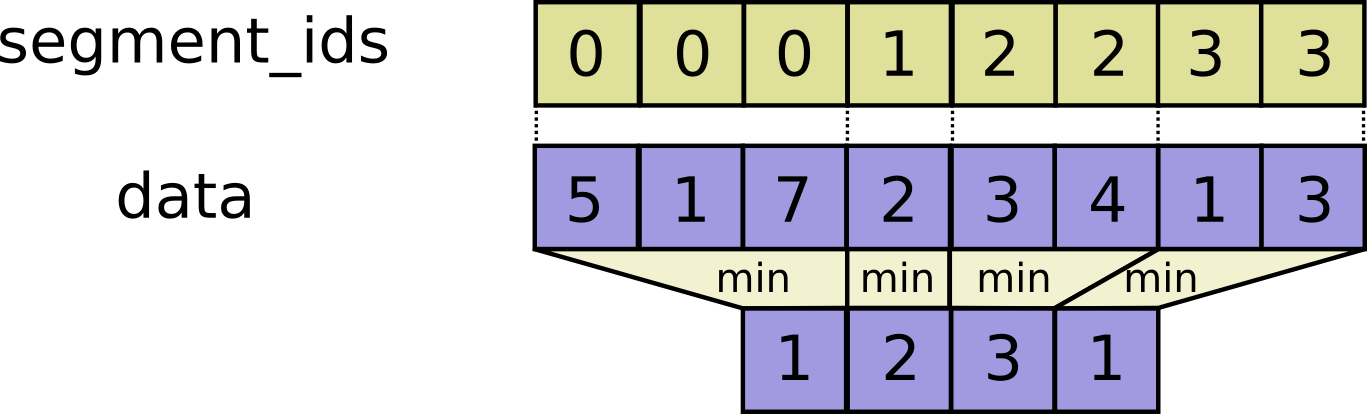

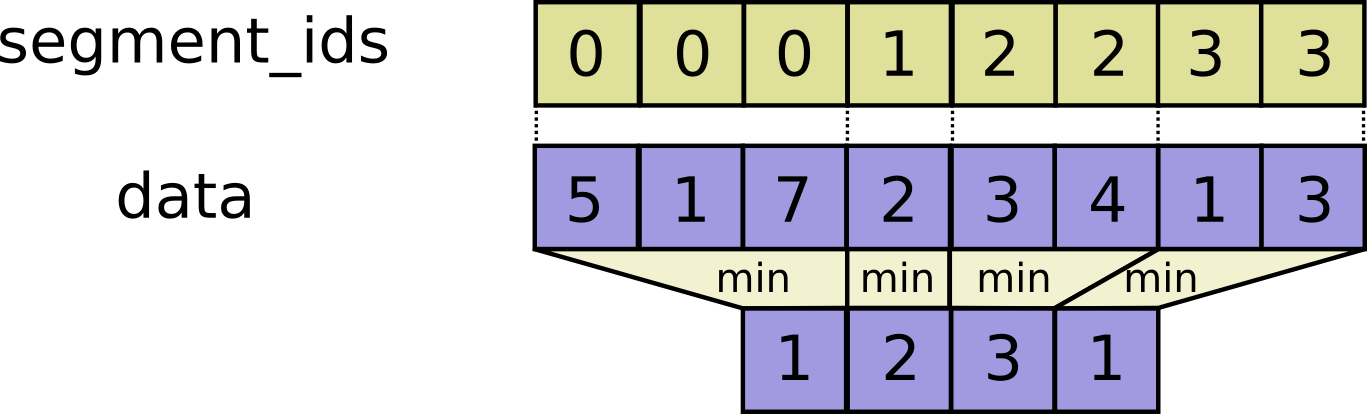

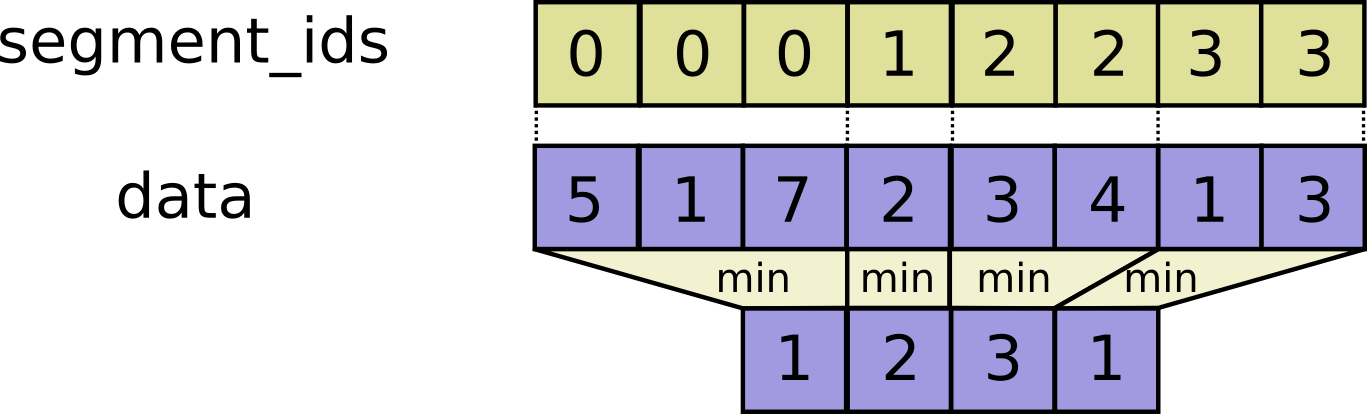

- segment_min

- segment_min_dyn

- segment_prod

- segment_prod_dyn

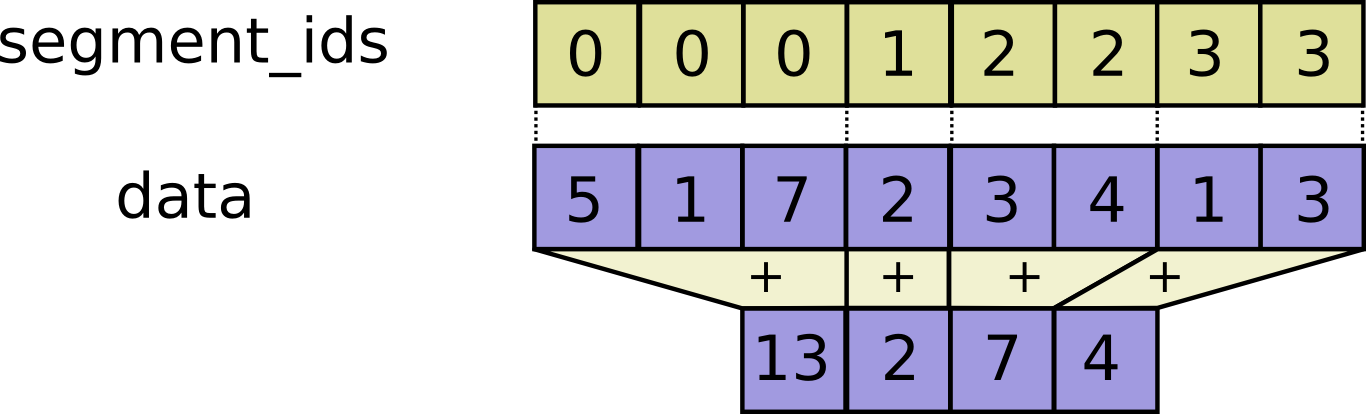

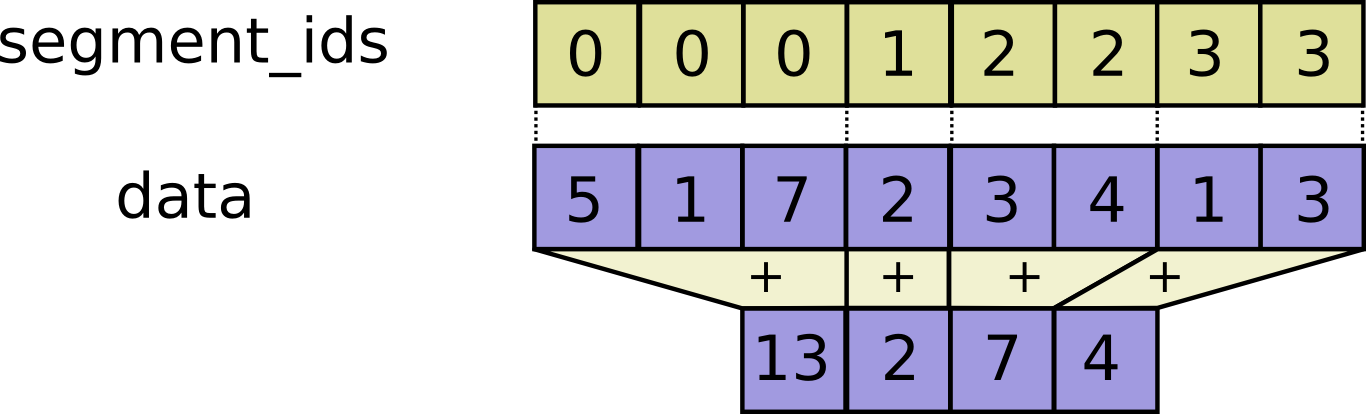

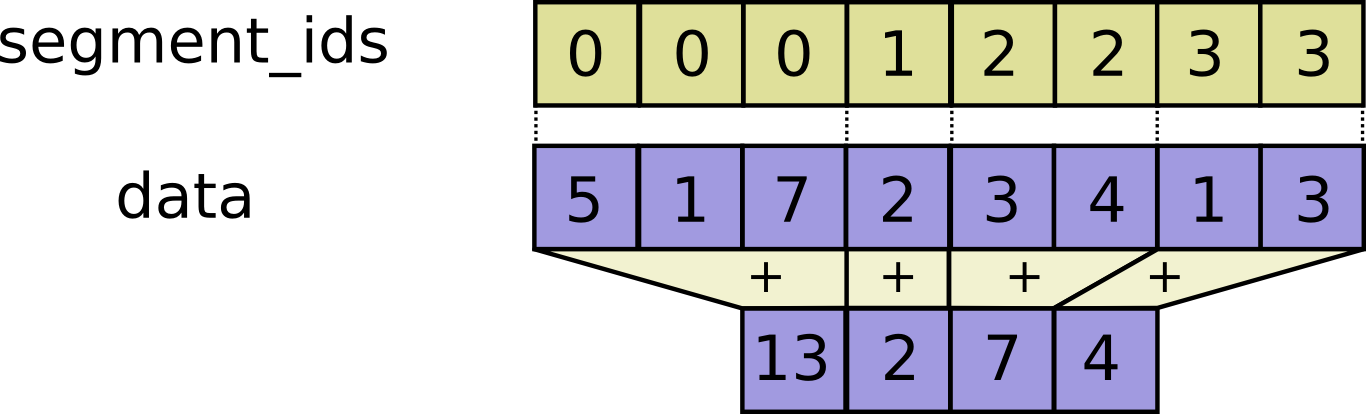

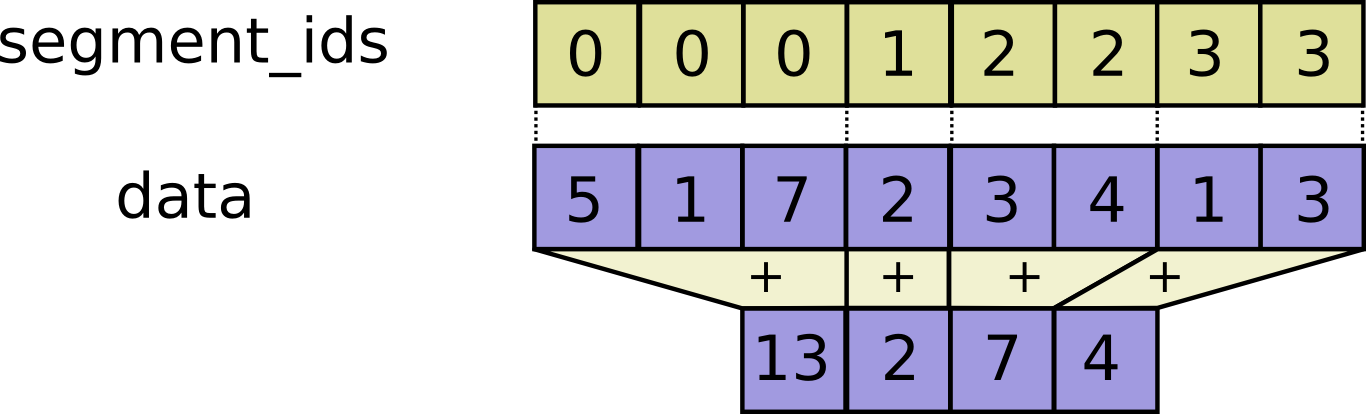

- segment_sum

- segment_sum

- segment_sum_dyn

- self_adjoint_eig

- self_adjoint_eig_dyn

- self_adjoint_eigvals

- self_adjoint_eigvals_dyn

- sequence_file_dataset

- sequence_file_dataset_dyn

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask

- sequence_mask_dyn

- serialize_many_sparse

- serialize_many_sparse_dyn

- serialize_sparse

- serialize_sparse

- serialize_sparse_dyn

- serialize_tensor

- serialize_tensor_dyn

- set_random_seed

- set_random_seed_dyn

- setdiff1d

- setdiff1d

- setdiff1d

- setdiff1d

- setdiff1d_dyn

- shape

- shape

- shape

- shape

- shape

- shape

- shape_dyn

- shape_n

- shape_n_dyn

- sigmoid

- sigmoid_dyn

- sign

- sign_dyn

- simple

- simple_dyn

- simple_struct

- simple_struct_dyn

- sin

- sin_dyn

- single_image_random_dot_stereograms

- single_image_random_dot_stereograms_dyn

- sinh

- sinh_dyn

- size

- size

- size

- size

- size

- size

- size_dyn

- skip_gram_generate_candidates

- skip_gram_generate_candidates_dyn

- slice

- slice

- slice

- slice

- slice

- slice

- slice

- slice

- slice

- slice

- slice

- slice

- slice_dyn

- sort

- sort_dyn

- space_to_batch

- space_to_batch_dyn

- space_to_batch_nd

- space_to_batch_nd_dyn

- space_to_depth

- space_to_depth

- space_to_depth

- space_to_depth

- space_to_depth_dyn

- sparse_add

- sparse_add

- sparse_add

- sparse_add

- sparse_add_dyn

- sparse_concat

- sparse_concat

- sparse_concat_dyn

- sparse_feature_cross

- sparse_feature_cross

- sparse_feature_cross_dyn

- sparse_feature_cross_v2

- sparse_feature_cross_v2

- sparse_feature_cross_v2_dyn

- sparse_fill_empty_rows

- sparse_fill_empty_rows

- sparse_fill_empty_rows

- sparse_fill_empty_rows

- sparse_fill_empty_rows

- sparse_fill_empty_rows

- sparse_fill_empty_rows

- sparse_fill_empty_rows

- sparse_fill_empty_rows_dyn

- sparse_mask

- sparse_mask_dyn

- sparse_matmul

- sparse_matmul_dyn

- sparse_maximum

- sparse_maximum_dyn

- sparse_merge

- sparse_merge

- sparse_merge

- sparse_merge

- sparse_merge

- sparse_merge

- sparse_merge

- sparse_merge

- sparse_merge

- sparse_merge

- sparse_merge

- sparse_merge

- sparse_merge

- sparse_merge

- sparse_merge

- sparse_merge

- sparse_merge

- sparse_merge

- sparse_merge_dyn

- sparse_minimum

- sparse_minimum_dyn

- sparse_placeholder

- sparse_placeholder

- sparse_placeholder

- sparse_placeholder

- sparse_placeholder

- sparse_placeholder

- sparse_placeholder

- sparse_placeholder

- sparse_placeholder

- sparse_placeholder

- sparse_placeholder

- sparse_placeholder

- sparse_placeholder_dyn

- sparse_reduce_max

- sparse_reduce_max

- sparse_reduce_max_dyn

- sparse_reduce_max_sparse

- sparse_reduce_max_sparse

- sparse_reduce_max_sparse_dyn

- sparse_reduce_sum

- sparse_reduce_sum

- sparse_reduce_sum_dyn

- sparse_reduce_sum_sparse

- sparse_reduce_sum_sparse

- sparse_reduce_sum_sparse_dyn

- sparse_reorder

- sparse_reorder

- sparse_reorder_dyn

- sparse_reset_shape

- sparse_reset_shape

- sparse_reset_shape

- sparse_reset_shape

- sparse_reset_shape_dyn

- sparse_reshape

- sparse_reshape

- sparse_reshape

- sparse_reshape

- sparse_reshape

- sparse_reshape

- sparse_reshape

- sparse_reshape

- sparse_reshape

- sparse_reshape

- sparse_reshape

- sparse_reshape

- sparse_reshape

- sparse_reshape

- sparse_reshape

- sparse_reshape

- sparse_reshape_dyn

- sparse_retain

- sparse_retain

- sparse_retain

- sparse_retain

- sparse_retain_dyn

- sparse_segment_mean

- sparse_segment_mean

- sparse_segment_mean

- sparse_segment_mean

- sparse_segment_mean

- sparse_segment_mean

- sparse_segment_mean

- sparse_segment_mean

- sparse_segment_mean

- sparse_segment_mean

- sparse_segment_mean_dyn

- sparse_segment_sqrt_n

- sparse_segment_sqrt_n

- sparse_segment_sqrt_n

- sparse_segment_sqrt_n

- sparse_segment_sqrt_n

- sparse_segment_sqrt_n

- sparse_segment_sqrt_n

- sparse_segment_sqrt_n

- sparse_segment_sqrt_n

- sparse_segment_sqrt_n

- sparse_segment_sqrt_n_dyn

- sparse_segment_sum

- sparse_segment_sum

- sparse_segment_sum

- sparse_segment_sum

- sparse_segment_sum

- sparse_segment_sum

- sparse_segment_sum

- sparse_segment_sum

- sparse_segment_sum

- sparse_segment_sum

- sparse_segment_sum_dyn

- sparse_slice

- sparse_slice

- sparse_slice_dyn

- sparse_softmax

- sparse_softmax_dyn

- sparse_split

- sparse_split

- sparse_split

- sparse_split

- sparse_split

- sparse_split

- sparse_split_dyn

- sparse_tensor_dense_matmul

- sparse_tensor_dense_matmul

- sparse_tensor_dense_matmul

- sparse_tensor_dense_matmul

- sparse_tensor_dense_matmul

- sparse_tensor_dense_matmul

- sparse_tensor_dense_matmul

- sparse_tensor_dense_matmul

- sparse_tensor_dense_matmul

- sparse_tensor_dense_matmul

- sparse_tensor_dense_matmul

- sparse_tensor_dense_matmul

- sparse_tensor_dense_matmul

- sparse_tensor_dense_matmul

- sparse_tensor_dense_matmul

- sparse_tensor_dense_matmul

- sparse_tensor_dense_matmul_dyn

- sparse_tensor_to_dense

- sparse_tensor_to_dense

- sparse_tensor_to_dense

- sparse_tensor_to_dense

- sparse_tensor_to_dense

- sparse_tensor_to_dense

- sparse_tensor_to_dense

- sparse_tensor_to_dense

- sparse_tensor_to_dense

- sparse_tensor_to_dense

- sparse_tensor_to_dense

- sparse_tensor_to_dense

- sparse_tensor_to_dense_dyn

- sparse_to_dense

- sparse_to_dense

- sparse_to_dense

- sparse_to_dense

- sparse_to_dense

- sparse_to_dense

- sparse_to_dense

- sparse_to_dense

- sparse_to_dense

- sparse_to_dense

- sparse_to_dense

- sparse_to_dense

- sparse_to_dense

- sparse_to_dense

- sparse_to_dense

- sparse_to_dense

- sparse_to_dense_dyn

- sparse_to_indicator

- sparse_to_indicator_dyn

- sparse_transpose

- sparse_transpose_dyn

- split

- split

- split

- split

- split

- split

- split

- split

- split

- split

- split

- split

- split_dyn

- sqrt

- sqrt_dyn

- square

- square_dyn

- squared_difference

- squared_difference

- squared_difference

- squared_difference

- squared_difference

- squared_difference

- squared_difference

- squared_difference

- squared_difference

- squared_difference_dyn

- squeeze

- squeeze

- squeeze

- squeeze_dyn

- stack

- stack

- stack_dyn

- stats_accumulator_scalar_add

- stats_accumulator_scalar_add_dyn

- stats_accumulator_scalar_deserialize

- stats_accumulator_scalar_deserialize_dyn

- stats_accumulator_scalar_flush

- stats_accumulator_scalar_flush_dyn

- stats_accumulator_scalar_is_initialized

- stats_accumulator_scalar_is_initialized_dyn

- stats_accumulator_scalar_make_summary

- stats_accumulator_scalar_make_summary_dyn

- stats_accumulator_scalar_resource_handle_op

- stats_accumulator_scalar_resource_handle_op

- stats_accumulator_scalar_resource_handle_op

- stats_accumulator_scalar_resource_handle_op_dyn

- stats_accumulator_scalar_serialize

- stats_accumulator_scalar_serialize_dyn

- stats_accumulator_tensor_add

- stats_accumulator_tensor_add_dyn

- stats_accumulator_tensor_deserialize

- stats_accumulator_tensor_deserialize_dyn

- stats_accumulator_tensor_flush

- stats_accumulator_tensor_flush_dyn

- stats_accumulator_tensor_is_initialized

- stats_accumulator_tensor_is_initialized_dyn

- stats_accumulator_tensor_make_summary

- stats_accumulator_tensor_make_summary_dyn

- stats_accumulator_tensor_resource_handle_op

- stats_accumulator_tensor_resource_handle_op

- stats_accumulator_tensor_resource_handle_op

- stats_accumulator_tensor_resource_handle_op_dyn

- stats_accumulator_tensor_serialize

- stats_accumulator_tensor_serialize_dyn

- stochastic_hard_routing_function

- stochastic_hard_routing_function_dyn

- stochastic_hard_routing_gradient

- stochastic_hard_routing_gradient_dyn

- stop_gradient

- stop_gradient_dyn

- strided_slice

- strided_slice

- strided_slice

- strided_slice

- strided_slice

- strided_slice

- strided_slice

- strided_slice

- strided_slice

- strided_slice

- strided_slice

- strided_slice

- strided_slice

- strided_slice

- strided_slice

- strided_slice

- strided_slice_dyn

- string_join

- string_join_dyn

- string_list_attr

- string_list_attr_dyn

- string_split

- string_split_dyn

- string_strip

- string_strip_dyn

- string_to_hash_bucket

- string_to_hash_bucket_dyn

- string_to_hash_bucket_fast

- string_to_hash_bucket_fast_dyn

- string_to_hash_bucket_strong

- string_to_hash_bucket_strong_dyn

- string_to_number

- string_to_number

- string_to_number

- string_to_number_dyn

- stub_resource_handle_op

- stub_resource_handle_op_dyn

- substr

- substr_dyn

- subtract

- subtract_dyn

- svd

- svd

- svd_dyn

- switch_case

- switch_case

- switch_case

- switch_case

- switch_case_dyn

- tables_initializer

- tables_initializer_dyn

- tan

- tan_dyn

- tanh

- tanh_dyn

- tensor_scatter_add

- tensor_scatter_add_dyn

- tensor_scatter_sub

- tensor_scatter_sub_dyn

- tensor_scatter_update

- tensor_scatter_update_dyn

- tensordot

- tensordot

- tensordot

- tensordot

- tensordot

- tensordot

- tensordot

- tensordot

- tensordot

- tensordot

- tensordot

- tensordot

- tensordot

- tensordot

- tensordot

- tensordot

- tensordot

- tensordot

- tensordot

- tensordot

- tensordot

- tensordot

- tensordot

- tensordot

- tensordot_dyn

- test_attr

- test_attr_dyn

- test_string_output

- test_string_output_dyn

- tile

- tile

- tile_dyn

- timestamp

- timestamp_dyn

- to_bfloat16

- to_bfloat16_dyn

- to_complex128

- to_complex128_dyn

- to_complex64

- to_complex64_dyn

- to_double

- to_double_dyn

- to_float

- to_float_dyn

- to_int32

- to_int32_dyn

- to_int64

- to_int64_dyn

- trace

- trace

- trace

- trace_dyn

- trainable_variables

- trainable_variables_dyn

- transpose

- transpose

- transpose

- transpose

- transpose

- transpose

- transpose_dyn

- traverse_tree_v4

- traverse_tree_v4_dyn

- tree_deserialize

- tree_deserialize_dyn

- tree_ensemble_deserialize

- tree_ensemble_deserialize_dyn

- tree_ensemble_is_initialized_op

- tree_ensemble_is_initialized_op_dyn

- tree_ensemble_serialize

- tree_ensemble_serialize_dyn

- tree_ensemble_stamp_token

- tree_ensemble_stamp_token_dyn

- tree_ensemble_stats

- tree_ensemble_stats_dyn

- tree_ensemble_used_handlers

- tree_ensemble_used_handlers_dyn

- tree_is_initialized_op

- tree_is_initialized_op_dyn

- tree_predictions_v4

- tree_predictions_v4_dyn

- tree_serialize

- tree_serialize_dyn

- tree_size

- tree_size_dyn

- truediv

- truediv

- truediv

- truediv_dyn

- truncated_normal

- truncated_normal

- truncated_normal

- truncated_normal

- truncated_normal

- truncated_normal

- truncated_normal

- truncated_normal

- truncated_normal

- truncated_normal

- truncated_normal

- truncated_normal

- truncated_normal

- truncated_normal

- truncated_normal

- truncated_normal

- truncated_normal_dyn

- truncatediv

- truncatediv

- truncatediv

- truncatediv

- truncatediv

- truncatediv

- truncatediv

- truncatediv

- truncatediv

- truncatediv_dyn

- truncatemod

- truncatemod

- truncatemod

- truncatemod

- truncatemod

- truncatemod

- truncatemod

- truncatemod

- truncatemod

- truncatemod_dyn

- try_rpc

- try_rpc_dyn

- tuple

- tuple

- tuple

- tuple_dyn

- two_float_inputs

- two_float_inputs_dyn

- two_float_inputs_float_output

- two_float_inputs_float_output_dyn

- two_float_inputs_int_output

- two_float_inputs_int_output_dyn

- two_float_outputs

- two_float_outputs_dyn

- two_int_inputs

- two_int_inputs_dyn

- two_int_outputs

- two_int_outputs_dyn

- two_refs_in

- two_refs_in_dyn

- type_list

- type_list_dyn

- type_list_restrict

- type_list_restrict_dyn

- type_list_twice

- type_list_twice_dyn

- unary

- unary_dyn

- unique

- unique

- unique_dyn

- unique_with_counts

- unique_with_counts

- unique_with_counts_dyn

- unpack_path

- unpack_path_dyn

- unravel_index

- unravel_index_dyn

- unsorted_segment_max

- unsorted_segment_max_dyn

- unsorted_segment_mean

- unsorted_segment_mean_dyn

- unsorted_segment_min

- unsorted_segment_min_dyn

- unsorted_segment_prod

- unsorted_segment_prod_dyn

- unsorted_segment_sqrt_n

- unsorted_segment_sqrt_n_dyn

- unsorted_segment_sum

- unsorted_segment_sum_dyn

- unstack

- unstack

- unstack

- unstack

- unstack

- unstack

- unstack_dyn

- update_model_v4

- update_model_v4_dyn

- variable_axis_size_partitioner

- variable_axis_size_partitioner_dyn

- variable_op_scope

- variable_op_scope_dyn

- variables_initializer

- variables_initializer

- variables_initializer_dyn

- vectorized_map

- vectorized_map

- vectorized_map_dyn

- verify_tensor_all_finite

- verify_tensor_all_finite

- verify_tensor_all_finite

- verify_tensor_all_finite_dyn

- wals_compute_partial_lhs_and_rhs

- wals_compute_partial_lhs_and_rhs_dyn

- where

- where

- where

- where

- where

- where

- where

- where

- where

- where

- where

- where

- where

- where

- where

- where

- where

- where

- where_dyn

- where_v2

- where_v2

- where_v2

- where_v2

- where_v2

- where_v2

- where_v2_dyn

- while_loop

- while_loop_dyn

- wrap_function

- wrap_function

- write_file

- write_file_dyn

- xla_broadcast_helper

- xla_broadcast_helper_dyn

- xla_cluster_output

- xla_cluster_output_dyn

- xla_conv

- xla_conv_dyn

- xla_dequantize

- xla_dequantize_dyn

- xla_dot

- xla_dot_dyn

- xla_dynamic_slice

- xla_dynamic_slice_dyn

- xla_dynamic_update_slice

- xla_dynamic_update_slice_dyn

- xla_einsum

- xla_einsum_dyn

- xla_if

- xla_if_dyn

- xla_key_value_sort

- xla_key_value_sort_dyn

- xla_launch

- xla_launch_dyn

- xla_pad

- xla_pad_dyn

- xla_recv

- xla_recv_dyn

- xla_reduce

- xla_reduce

- xla_reduce

- xla_reduce_dyn

- xla_reduce_window

- xla_reduce_window_dyn

- xla_replica_id

- xla_replica_id_dyn

- xla_select_and_scatter

- xla_select_and_scatter

- xla_select_and_scatter

- xla_select_and_scatter

- xla_select_and_scatter

- xla_select_and_scatter

- xla_select_and_scatter

- xla_select_and_scatter

- xla_select_and_scatter

- xla_select_and_scatter_dyn

- xla_self_adjoint_eig

- xla_self_adjoint_eig_dyn

- xla_send

- xla_send_dyn

- xla_sort

- xla_sort_dyn

- xla_svd

- xla_svd_dyn

- xla_while

- xla_while

- xla_while

- xla_while

- xla_while

- xla_while

- xla_while

- xla_while

- xla_while

- xla_while_dyn

- zero_initializer

- zero_initializer_dyn

- zero_var_initializer

- zero_var_initializer_dyn

- zeros

- zeros

- zeros

- zeros

- zeros_dyn

- zeros_like

- zeros_like

- zeros_like

- zeros_like

- zeros_like_dyn

- zeta

- zeta_dyn

Properties

- a_fn

- abs_fn

- accumulate_n_fn

- acos_fn

- acosh_fn

- add_check_numerics_ops_fn

- add_fn

- add_n_fn

- add_to_collection_fn

- add_to_collections_fn

- adjust_hsv_in_yiq_fn

- all_variables_fn

- angle_fn

- arg_max_fn

- arg_min_fn

- argmax_fn

- argmin_fn

- argsort_fn

- as_dtype_fn

- as_string_fn

- asin_fn

- asinh_fn

- assert_equal_fn

- Assert_fn

- assert_greater_equal_fn

- assert_greater_fn

- assert_integer_fn

- assert_less_equal_fn

- assert_less_fn

- assert_near_fn

- assert_negative_fn

- assert_non_negative_fn

- assert_non_positive_fn

- assert_none_equal_fn

- assert_positive_fn

- assert_proper_iterable_fn

- assert_rank_at_least_fn

- assert_rank_fn

- assert_rank_in_fn

- assert_same_float_dtype_fn

- assert_scalar_fn

- assert_type_fn

- assert_variables_initialized_fn

- assign_add_fn

- assign_fn

- assign_sub_fn

- atan_fn

- atan2_fn

- atanh_fn

- attr_bool_fn

- attr_bool_list_fn

- attr_default_fn

- attr_empty_list_default_fn

- attr_enum_fn

- attr_enum_list_fn

- attr_float_fn

- attr_fn

- attr_list_default_fn

- attr_list_min_fn

- attr_list_type_default_fn

- attr_min_fn

- attr_partial_shape_fn

- attr_partial_shape_list_fn

- attr_shape_fn

- attr_shape_list_fn

- attr_type_default_fn

- audio_microfrontend_fn

- b_fn

- batch_gather_fn

- batch_scatter_update_fn

- batch_to_space_fn

- batch_to_space_nd_fn

- betainc_fn

- bfloat16

- binary_fn

- bincount_fn_

- bipartite_match_fn

- bitcast_fn

- bool

- boolean_mask_fn

- broadcast_dynamic_shape_fn

- broadcast_static_shape_fn

- broadcast_to_fn

- bucketize_with_input_boundaries_fn

- build_categorical_equality_splits_fn

- build_dense_inequality_splits_fn

- build_sparse_inequality_splits_fn

- bytes_in_use_fn

- bytes_limit_fn

- case_fn

- cast_fn

- ceil_fn

- center_tree_ensemble_bias_fn

- check_numerics_fn

- cholesky_fn

- cholesky_solve_fn

- clip_by_average_norm_fn

- clip_by_global_norm_fn

- clip_by_norm_fn

- clip_by_value_fn

- complex_fn

- complex_struct_fn

- complex128

- complex64

- concat_fn

- cond_fn

- confusion_matrix_fn_

- conj_fn

- constant_fn_

- container_fn

- contrib

- contrib_dyn

- control_dependencies_fn

- control_flow_v2_enabled_fn

- convert_to_tensor_fn

- convert_to_tensor_or_indexed_slices_fn

- convert_to_tensor_or_sparse_tensor_fn

- copy_op_fn

- cos_fn

- cosh_fn

- count_nonzero_fn

- count_up_to_fn

- create_fertile_stats_variable_fn

- create_partitioned_variables_fn

- create_quantile_accumulator_fn

- create_stats_accumulator_scalar_fn

- create_stats_accumulator_tensor_fn

- create_tree_ensemble_variable_fn

- create_tree_variable_fn

- cross_fn

- cumprod_fn

- cumsum_fn

- custom_gradient_fn

- decision_tree_ensemble_resource_handle_op_fn

- decision_tree_resource_handle_op_fn

- decode_base64_fn

- decode_compressed_fn

- decode_csv_fn

- decode_json_example_fn

- decode_libsvm_fn

- decode_raw_fn_

- default_attrs_fn

- delete_session_tensor_fn

- depth_to_space_fn

- dequantize_fn

- deserialize_many_sparse_fn

- device_fn

- device_placement_op_fn

- diag_fn

- diag_part_fn

- digamma_fn

- dimension_at_index_fn

- dimension_value_fn

- disable_control_flow_v2_fn

- disable_eager_execution_fn

- disable_tensor_equality_fn

- disable_v2_behavior_fn

- disable_v2_tensorshape_fn

- div_fn

- div_no_nan_fn

- divide_fn

- dynamic_partition_fn

- dynamic_stitch_fn

- edit_distance_fn

- einsum_fn

- enable_control_flow_v2_fn

- enable_eager_execution_fn

- enable_tensor_equality_fn

- enable_v2_behavior_fn

- enable_v2_tensorshape_fn

- encode_base64_fn

- equal_fn

- erf_fn

- erfc_fn

- executing_eagerly_fn

- exp_fn

- expand_dims_fn

- expm1_fn

- extract_image_patches_fn

- extract_volume_patches_fn

- eye_fn

- fake_quant_with_min_max_args_fn

- fake_quant_with_min_max_args_gradient_fn

- fake_quant_with_min_max_vars_fn

- fake_quant_with_min_max_vars_gradient_fn

- fake_quant_with_min_max_vars_per_channel_fn

- fake_quant_with_min_max_vars_per_channel_gradient_fn

- feature_usage_counts_fn

- fertile_stats_deserialize_fn

- fertile_stats_is_initialized_op_fn

- fertile_stats_resource_handle_op_fn

- fertile_stats_serialize_fn

- fft_fn

- fft2d_fn

- fft3d_fn

- fill_fn

- finalize_tree_fn

- fingerprint_fn

- five_float_outputs_fn

- fixed_size_partitioner_fn

- float_input_fn

- float_output_fn

- float_output_string_output_fn

- float16

- float32

- float64

- floor_div_fn

- floor_fn

- floordiv_fn

- foldl_fn

- foldr_fn

- foo1_fn

- foo2_fn

- foo3_fn

- func_attr_fn

- func_list_attr_fn

- function_fn

- gather_fn

- gather_nd_fn

- gather_tree_fn

- get_collection_fn

- get_collection_ref_fn

- get_default_graph_fn

- get_default_session__fn

- get_local_variable_fn

- get_logger_fn

- get_seed_fn

- get_session_handle_fn

- get_session_tensor_fn

- get_static_value_fn

- get_variable_fn

- get_variable_scope_fn

- global_norm_fn

- global_variables_fn

- global_variables_initializer_fn

- grad_pass_through_fn

- gradient_trees_partition_examples_fn

- gradient_trees_prediction_fn

- gradient_trees_prediction_verbose_fn

- gradients_fn

- graph_def_version_fn

- greater_equal_fn

- greater_fn

- group_fn

- grow_tree_ensemble_fn

- grow_tree_v4_fn

- guarantee_const_fn

- hard_routing_function_fn

- hessians_fn

- histogram_fixed_width_bins_fn

- histogram_fixed_width_fn

- identity_fn

- identity_n_fn

- ifft_fn

- ifft2d_fn

- ifft3d_fn

- igamma_fn

- igammac_fn

- imag_fn

- image_connected_components_fn

- image_projective_transform_fn

- image_projective_transform_v2_fn

- import_graph_def_fn

- in_polymorphic_twice_fn

- init_scope_fn

- initialize_all_tables_fn

- initialize_all_variables_fn

- initialize_local_variables_fn

- initialize_variables_fn

- int_attr_fn

- int_input_float_input_fn

- int_input_fn

- int_input_int_output_fn

- int_output_float_output_fn

- int_output_fn

- int16

- int32

- int64

- int64_output_fn

- int8

- invert_permutation_fn

- is_finite_fn

- is_inf_fn

- is_nan_fn

- is_non_decreasing_fn

- is_numeric_tensor_fn

- is_strictly_increasing_fn

- is_tensor_fn

- is_variable_initialized_fn

- k_feature_gradient_fn

- k_feature_routing_function_fn

- kernel_label_fn

- kernel_label_required_fn

- lbeta_fn

- less_equal_fn

- less_fn

- lgamma_fn

- linspace_fn

- list_input_fn

- list_output_fn

- load_file_system_library_fn

- load_library_fn

- load_op_library_fn

- local_variables_fn

- local_variables_initializer_fn

- log_fn

- log_sigmoid_fn

- log1p_fn

- logical_and_fn

- logical_not_fn

- logical_or_fn

- logical_xor_fn

- make_ndarray_fn

- make_quantile_summaries_fn

- make_template_fn

- make_tensor_proto_fn

- map_fn_fn

- masked_matmul_fn

- matching_files_fn

- matmul_fn

- matrix_band_part_fn

- matrix_determinant_fn

- matrix_diag_fn

- matrix_diag_part_fn

- matrix_inverse_fn

- matrix_set_diag_fn

- matrix_solve_fn

- matrix_solve_ls_fn

- matrix_square_root_fn

- matrix_transpose_fn

- matrix_triangular_solve_fn

- max_bytes_in_use_fn

- maximum_fn

- meshgrid_fn

- min_max_variable_partitioner_fn

- minimum_fn

- mixed_struct_fn

- mod_fn

- model_variables_fn

- moving_average_variables_fn

- multinomial_fn

- multiply_fn

- n_in_polymorphic_twice_fn

- n_in_twice_fn

- n_in_two_type_variables_fn

- n_ints_in_fn

- n_ints_out_default_fn

- n_ints_out_fn

- n_polymorphic_in_fn

- n_polymorphic_out_default_fn

- n_polymorphic_out_fn

- n_polymorphic_restrict_in_fn

- n_polymorphic_restrict_out_fn

- negative_fn

- no_op_fn

- no_regularizer_fn

- NoGradient_fn

- nondifferentiable_batch_function_fn

- none_fn

- norm_fn

- not_equal_fn

- numpy_function_fn

- obtain_next_fn

- old_fn

- one_hot_fn

- ones_fn

- ones_like_fn

- op_scope_fn

- op_with_default_attr_fn

- op_with_future_default_attr_fn

- out_t_fn

- out_type_list_fn

- out_type_list_restrict_fn

- pad_fn

- parallel_stack_fn

- parse_example_fn

- parse_single_example_fn

- parse_single_sequence_example_fn

- parse_tensor_fn

- periodic_resample_fn

- periodic_resample_op_grad_fn

- placeholder_fn

- placeholder_with_default_fn

- plugin_dir

- plugin_dir_dyn

- polygamma_fn

- polymorphic_default_out_fn

- polymorphic_fn

- polymorphic_out_fn

- pow_fn

- print_fn

- Print_fn

- process_input_v4_fn

- py_func_fn

- py_function_fn

- qint16

- qint32

- qint8

- qr_fn

- quantile_accumulator_add_summaries_fn

- quantile_accumulator_deserialize_fn

- quantile_accumulator_flush_fn

- quantile_accumulator_flush_summary_fn

- quantile_accumulator_get_buckets_fn

- quantile_accumulator_is_initialized_fn

- quantile_accumulator_serialize_fn

- quantile_buckets_fn

- quantile_stream_resource_handle_op_fn

- quantiles_fn

- quantize_fn

- quantize_v2_fn

- quantized_concat_fn

- QUANTIZED_DTYPES

- quint16

- quint8

- random_crop_fn

- random_gamma_fn

- random_normal_fn

- random_poisson_fn

- random_shuffle_fn

- random_uniform_fn

- range_fn

- rank_fn

- read_file_fn

- real_fn

- realdiv_fn

- reciprocal_fn

- recompute_grad_fn

- reduce_all_fn_

- reduce_any_fn_

- reduce_join_fn

- reduce_logsumexp_fn_

- reduce_max_fn_

- reduce_mean_fn_

- reduce_min_fn_

- reduce_prod_fn_

- reduce_slice_max_fn

- reduce_slice_min_fn

- reduce_slice_prod_fn

- reduce_slice_sum_fn

- reduce_sum_fn_

- ref_in_fn

- ref_input_float_input_fn

- ref_input_float_input_int_output_fn

- ref_input_int_input_fn

- ref_out_fn

- ref_output_float_output_fn

- ref_output_fn

- regex_replace_fn

- register_tensor_conversion_function_fn

- reinterpret_string_to_float_fn

- remote_fused_graph_execute_fn

- repeat_fn

- report_uninitialized_variables_fn

- required_space_to_batch_paddings_fn

- requires_older_graph_version_fn

- resampler_fn

- resampler_grad_fn

- reserved_attr_fn

- reserved_input_fn

- reset_default_graph_fn

- reshape_fn

- resource

- resource_create_op_fn

- resource_initialized_op_fn

- resource_using_op_fn

- rest_of_the_axes

- restrict_fn

- reverse_fn_

- reverse_sequence_fn

- rint_fn

- roll_fn

- round_fn

- routing_function_fn

- routing_gradient_fn

- rpc_fn

- rsqrt_fn

- s

- s_dyn

- saturate_cast_fn

- scalar_mul_fn